# Systems

No edit summary |

No edit summary |

||

| (174 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

Nazarbayev University High Performance Computing team currently operates three main facilities - Irgetas, Shabyt, and Muon. Below we provide a brief overview of them. | |||

Nazarbayev University | |||

== Irgetas cluster == | |||

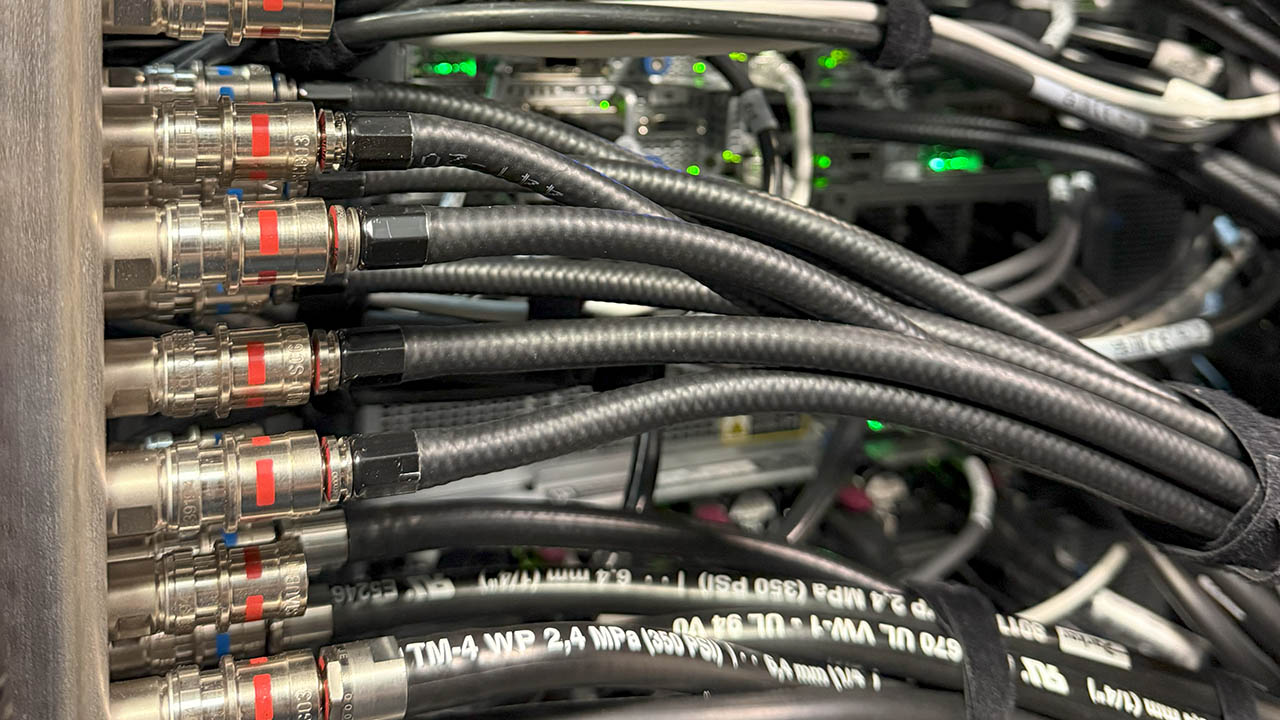

[[File:Irgetas_picture_1.jpg|420x420px|border]] [[File:Irgetas_picture_2.jpg|420x420px|border]] [[File:Irgetas_picture_3.jpg|420x420px|border]] | |||

=Shabyt cluster= | [[File:Irgetas_picture_4.jpg|420x420px|border]] [[File:Irgetas_picture_5.jpg|420x420px|border]] [[File:Irgetas_picture_6.jpg|420x420px|border]] | ||

[[File: | |||

Shabyt cluster | The Irgetas cluster is NU's most advanced computational facility on campus. It was deployed in September 2025 and features high compute density and efficiency enabled by direct liquid cooling. Manufactured by Hewlett Packard Enterprise (HPE), it has the following configuration: | ||

* 20 | |||

* 4 | *; 6 GPU compute nodes. Each GPU node features | ||

* 1 | *: Two AMD EPYC 9654 CPUs (96 cores / 192 threads, 2.4 GHz Base) | ||

* | *: Four Nvidia H100 SMX5 GPUs (80 GB HBM3) | ||

* | *: 768 GB DDR5-4800 RAM (12-channel) | ||

* | *: 1.92 TB local SSD scratch storage | ||

* 144 TB (raw) HPE MSA 2050 SAS HDD Array for large data storage | *: Two Infiniband NDR 400 Gbps network adapters (800 Gbps total) | ||

*: 25 Gbps SPF28 Ethernet network adapter | |||

*: Rocky Linux 9.6 | |||

*; 10 CPU compute nodes. Each CPU node features | |||

*: Two AMD EPYC 9684X CPUs (96 cores / 192 threads, 2.55 GHz Base, 1152 MB 3D V-Cache) | |||

*: 384 GB DDR5-4800 RAM (12-channel) | |||

*: 1.92 TB local SSD scratch storage | |||

*: Infiniband NDR 200 Gbps network adapter | |||

*: 25 Gbps SPF28 Ethernet network adapter | |||

*: Rocky Linux 9.6 | |||

*; 1 Interactive login node | |||

*: AMD EPYC 9684X (96 cores / 192 threads, 2.55 GHz Base, 1152 MB 3D V-Cache) | |||

*: 192 GB DDR5-4800 RAM (12-channel) | |||

*: 7.68 TB local SSD scratch storage | |||

*: Infiniband NDR 200 Gbps network adapter | |||

*: 25 Gbps SPF28 Ethernet network adapter | |||

*: Rocky Linux 9.6 | |||

*; 1 Management node | |||

*: AMD EPYC 9354 CPUs (32 cores / 64 threads, 3.25 GHz Base) | |||

*: 256 GB DDR5-4800 RAM (8-channel) | |||

*: 15.36 TB local SSD storage | |||

*: 25 Gbps SPF28 Ethernet network adapter | |||

*: Rocky Linux 9.6 | |||

*; NVMe SSD storage server for software and user home directories (/shared) | |||

*: Two AMD EPYC 9354 CPUs (32 cores / 64 threads, 3.25 GHz Base) | |||

*: 768 GB DDR5-4800 RAM (12-channel) | |||

*: 122 TB total raw capacity | |||

*: 92 TB total usable space in RAID 6 configuration | |||

*: Sustained sequential read speed from compute nodes > 80 GBps | |||

*: Sustained sequential write speed from compute nodes > 20 GBps | |||

*: Two Infiniband NDR 400 Gbps network adapters (800 Gbps total) | |||

*: 25 Gbps SPF28 Ethernet network adapter | |||

*: Rocky Linux 9.6 | |||

*; Nvidia Infiniband NDR Quantum-2 QM9700 managed switch (compute network) | |||

*: 64 ports (400 Gbps per port) | |||

*; HPE Aruba Networking CX 8325‑48Y8C 25G SFP/SFP+/SFP28 switch (application network) | |||

*: 48 ports (SFP28, 25 Gbps per port) | |||

*; HPE Aruba Networking 2930F 48G 4SFP+ switch (management network) | |||

*: 48 ports (1 Gbps per port) | |||

*; HPE Cray XD Direct liquid cooling system | |||

*: HPE Cray XD 75kW 208V FIO In-Rack Coolant Distribution Unit | |||

*: Three-chiller setup with BlueBox ZETA Rev HE FC 3.2 | |||

The system is assembled in a single rack and physically located in NU data center in Block 1. | |||

{| class="wikitable" | |||

|+Irgetas cluster theoretical peak performance | |||

!Subsystem | |||

!FP8 | |||

!FP16 | |||

!FP32 | |||

!FP64 | |||

|- | |||

|CPUs (total) | |||

| | |||

| | |||

|245.0 TFLOPS | |||

|122.5 TFLOPS | |||

|- | |||

|GPUs (total) | |||

|47,492 TFLOPS | |||

|23,746 TFLOPS | |||

|1,606 TFLOPS | |||

|803 TFLOPS | |||

|} | |||

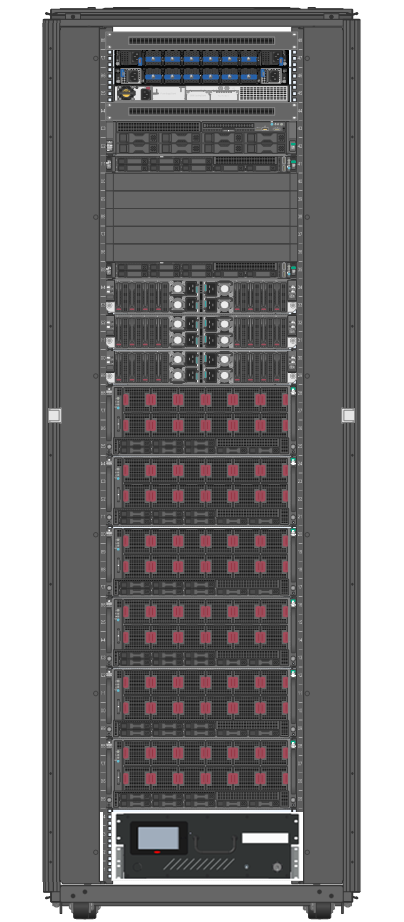

[[File:Irgetas_rack.png|frameless|496x496px]] | |||

<br> | |||

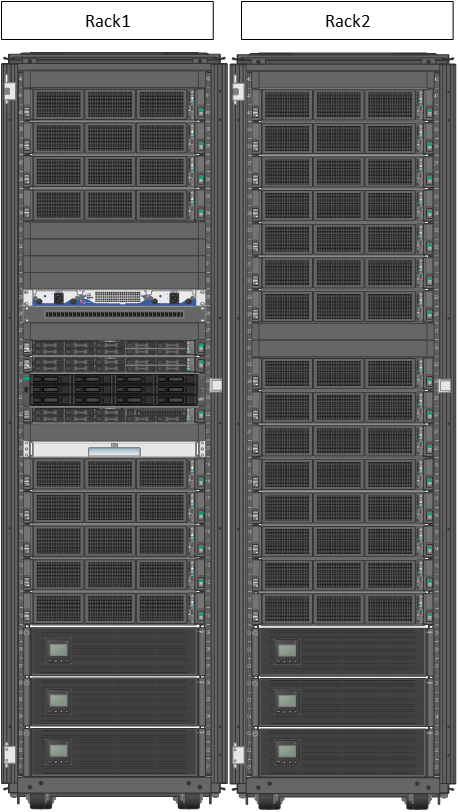

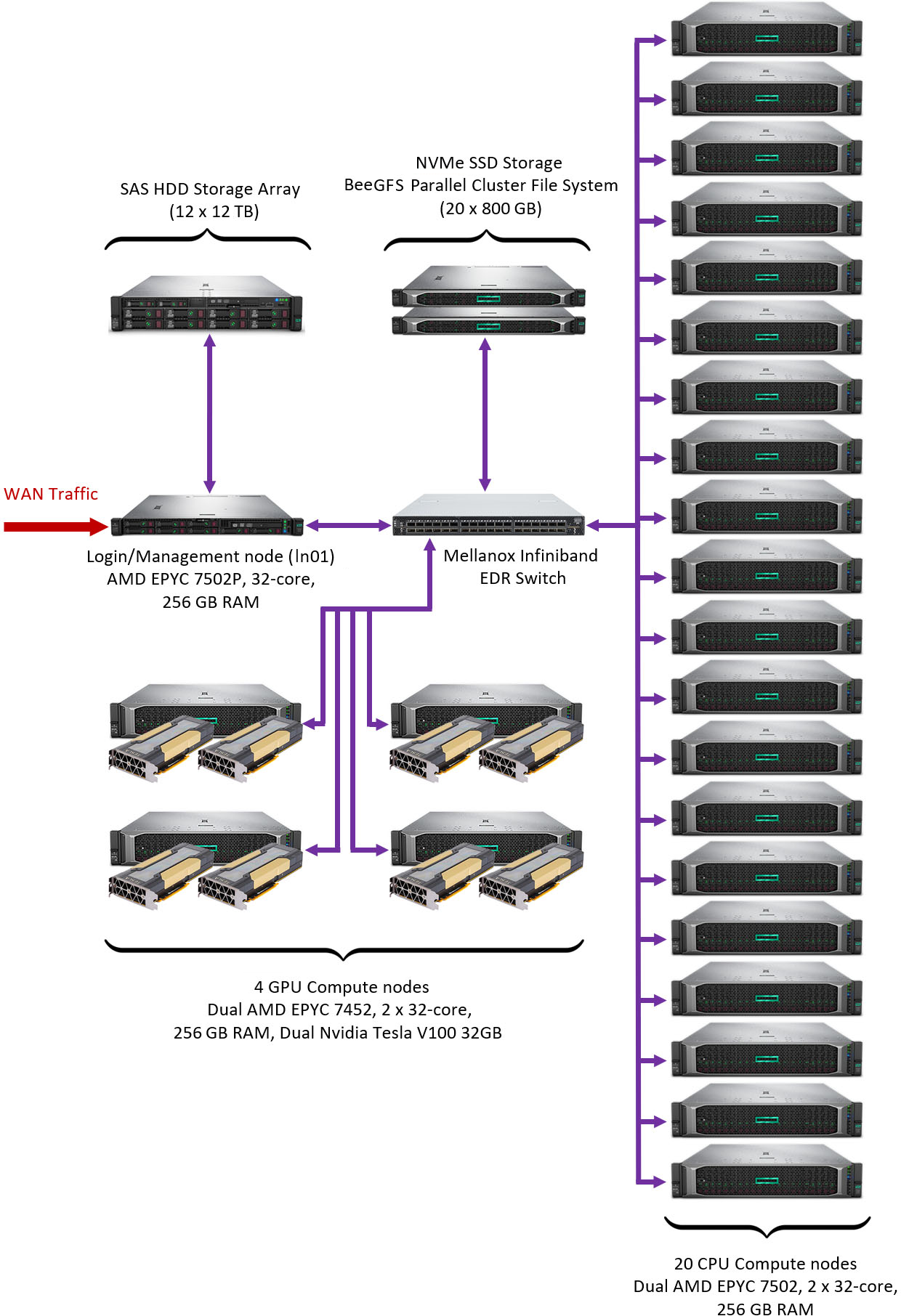

== Shabyt cluster == | |||

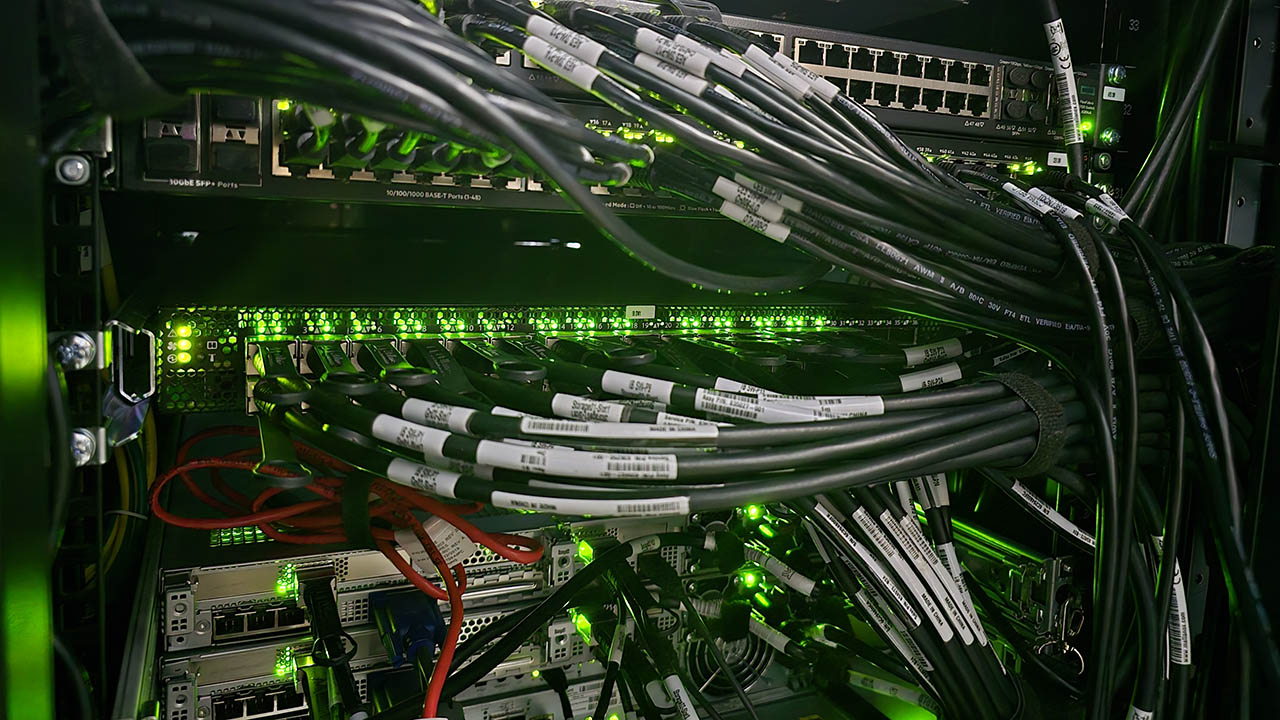

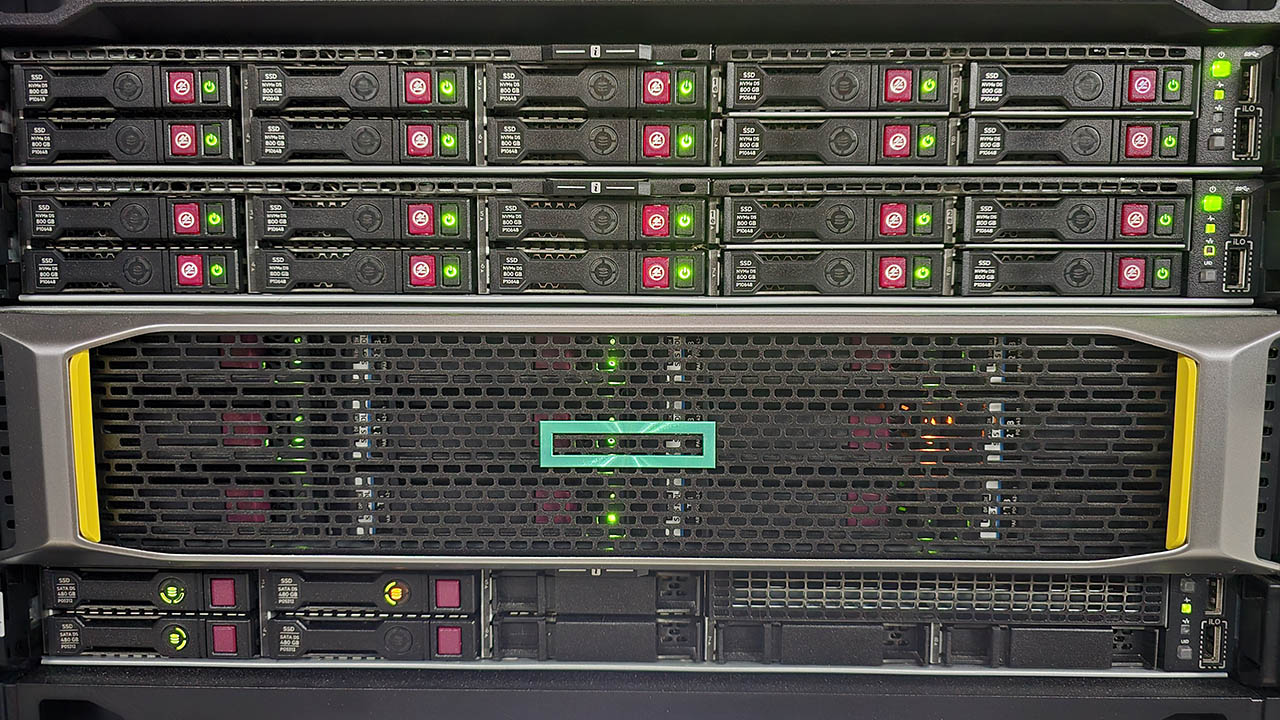

[[File:Shabyt_picture_3.jpg|420x420px|border]] [[File:Shabyt picture 1.jpg|420x420px|border]] [[File:Shabyt_picture_2.jpg|alt=|420x420px|border]] | |||

The Shabyt cluster is manufactured by Hewlett Packard Enterprise (HPE) and deployed in 2020. For several years it served as the primary platform for performing computational tasks by NU researchers. It has the following hardware configuration: | |||

*; 20 CPU compute nodes. Each CPU node features | |||

*: Two AMD EPYC 7502 CPUs (32 cores / 64 threads, 2.5 GHz Base) | |||

*: 256 GB DDR4-2933 RAM (8-channel) | |||

*: Infiniband EDR 100 Gbps network adapter | |||

*: Rocky Linux 8.10 | |||

*; 4 GPU compute nodes. Each GPU node features | |||

*: Two AMD EPYC 7452 CPUs (32 cores / 64 threads, 2.3 GHz Base) | |||

*: Two Nvidia V100 GPUs (32 GB HBM2) | |||

*: 256 GB DDR4-2933 RAM (8-channel) | |||

*: Infiniband EDR 100 Gbps network adapter | |||

*: Rocky Linux 8.10 | |||

*; 1 Interactive login node | |||

*: AMD EPYC 7502P CPU (32 cores / 64 threads, 2.5 GHz Base) | |||

*: 256 GB DDR4-2933 RAM (8-channel) | |||

*: Infiniband EDR 100 Gbps network adapter | |||

*: Rocky Linux 8.10 | |||

*; A storage system based on BeeGFS and consisting of two NVMe SSD storage servers in RAID 6 configuration for software and user home directories (/shared). The total capacity is 16 TB (raw), 9.9 TB (usable). Each storage server features | |||

*: AMD EPYC 7452 CPU (32 cores / 64 threads, 2.3 GHz Base) | |||

*: 128 GB DDR4-2933 RAM (8-channel) | |||

*: Two Infiniband EDR 100 Gbps network adapters (200 Gbps total) | |||

*: Rocky Linux 8.10 | |||

*; 144 TB (raw) HPE MSA 2050 SAS HDD Array in RAID 6 configuration for backups and large data storage for user groups (/zdisk) | |||

*; Mellanox Infiniband EDR v2 Managed switch (compute network) | |||

*: 36 ports (100 Gbps per port) | |||

*; HPE 5700 48G 4XG 2QSFP+ switch (application network) | |||

*: 48 ports (1 Gbps per port) | |||

*; Aruba 2540 48G 4SFP+ switch (management network) | |||

*: 48 ports (1 Gbps per port) | |||

The system is assembled in two racks and is physically located in NU data center in Block C2 | |||

{| class="wikitable" | |||

|+Shabyt cluster theoretical peak performance | |||

!Subsystem | |||

!FP8 | |||

!FP16 | |||

!FP32 | |||

!FP64 | |||

|- | |||

|CPUs (total) | |||

| | |||

| | |||

|121.7 TFLOPS | |||

|60.8 TFLOPS | |||

|- | |||

|GPUs (total) | |||

| | |||

|897.6 TFLOPS | |||

|112.2 TFLOPS | |||

|56.1 TFLOPS | |||

|} | |||

[[File:Shabyt racks.png|frameless|532x532px]] [[File:Shabyt_hardware_scheme.png|frameless|544x544px]] | |||

<br> | |||

== Muon cluster == | |||

[[File:Muon picture 1.jpg|420x420px|border]] [[File:Muon picture 3.jpg|420x420px|border]] [[File:Muon picture 2.jpg|420x420px|border]] | |||

Muon is an older cluster used by the faculty of Physics Department. It was manufactured by HPE and first deployed in 2017. It has the following hardware configuration: | Muon is an older cluster used by the faculty of Physics Department. It was manufactured by HPE and first deployed in 2017. It has the following hardware configuration: | ||

= Other facilities = | *; 10 CPU compute nodes. Each CPU node features | ||

There are several other computational facilities | *: Intel Xeon CPU E5-2690v4 (14 cores / 28 threads, 2600 MHz Base) | ||

{| style=" | *: 64 GB DDR4-2400 RAM (4-channel) | ||

*: 1 Gbps Ethernet network adapter | |||

*: Rocky Linux 8.10 | |||

*; Interactive login node | |||

*: Intel Xeon CPU E5-2640v4 (10 cores / 20 threads, 2400 MHz Base) | |||

*: 64 GB DDR4-2400 RAM (4-channel) | |||

*: 10 Gbps Ethernet network adapter (WAN traffic) | |||

*: 10 Gbps Ethernet network adapter (compute traffic) | |||

*: Rocky Linux 8.10 | |||

*; 2.84 TB (raw) SSD storage for software and user home directories (/shared) | |||

*; 7.2 TB (raw) HDD RAID 5 storage for backups and large data storage for user group (/zdisk) | |||

*; HPE 5800 Ethernet switch (compute network) | |||

The system is physically located in NU data center in Block 1. | |||

{| class="wikitable" | |||

|+Muon cluster theoretical peak performance | |||

!Subsystem | |||

!FP8 | |||

!FP16 | |||

!FP32 | |||

!FP64 | |||

|- | |||

|CPUs (total) | |||

| | |||

| | |||

|11.6 TFLOPS | |||

|5.8 TFLOPS | |||

|} | |||

<br> | |||

== Other facilities on campus == | |||

There are several other computational facilities at NU that are not managed by the NU HPC Team. Brief information about them is provided below. All inquiries regarding their use for research projects should be directed to the person responsible for each facility. | |||

{| class="wikitable" style="float: left; margin: auto" | |||

|+ | |||

|- | |- | ||

! | ! Cluster name !! Short description !! Contact details | ||

! | |||

! | |||

|- | |- | ||


Latest revision as of 13:04, 1 October 2025

The Nazarbayev University (NU) High Performance Computing (HPC) Team currently operates three main facilities: Irgetas, Shabyt, and Muon. A brief overview of each is provided below.

## Irgetas cluster

The Irgetas cluster is NU's most advanced computational facility on campus. It was deployed in September 2025 and features high compute density and efficiency enabled by direct liquid cooling. Manufactured by Hewlett Packard Enterprise (HPE), it has the following configuration:

- 6 GPU compute nodes. Each GPU node features:
  - Two AMD EPYC 9654 CPUs (96 cores / 192 threads, 2.4 GHz Base)
  - Four Nvidia H100 SXM5 GPUs (80 GB HBM3)
  - 768 GB DDR5-4800 RAM (12-channel)
  - 1.92 TB local SSD scratch storage
  - Two InfiniBand NDR 400 Gbps network adapters (800 Gbps total)
  - 25 Gbps SFP28 Ethernet network adapter
  - Rocky Linux 9.6

- 10 CPU compute nodes. Each CPU node features:
  - Two AMD EPYC 9684X CPUs (96 cores / 192 threads, 2.55 GHz Base, 1152 MB 3D V-Cache)
  - 384 GB DDR5-4800 RAM (12-channel)
  - 1.92 TB local SSD scratch storage
  - InfiniBand NDR 200 Gbps network adapter
  - 25 Gbps SFP28 Ethernet network adapter
  - Rocky Linux 9.6

- 1 interactive login node:
  - AMD EPYC 9684X CPU (96 cores / 192 threads, 2.55 GHz Base, 1152 MB 3D V-Cache)
  - 192 GB DDR5-4800 RAM (12-channel)
  - 7.68 TB local SSD scratch storage
  - InfiniBand NDR 200 Gbps network adapter
  - 25 Gbps SFP28 Ethernet network adapter
  - Rocky Linux 9.6

- 1 management node:
  - AMD EPYC 9354 CPUs (32 cores / 64 threads, 3.25 GHz Base)
  - 256 GB DDR5-4800 RAM (8-channel)
  - 15.36 TB local SSD storage
  - 25 Gbps SFP28 Ethernet network adapter
  - Rocky Linux 9.6

- NVMe SSD storage server for software and user home directories (/shared):
  - Two AMD EPYC 9354 CPUs (32 cores / 64 threads, 3.25 GHz Base)
  - 768 GB DDR5-4800 RAM (12-channel)
  - 122 TB total raw capacity
  - 92 TB total usable space in RAID 6 configuration
  - Sustained sequential read speed from compute nodes > 80 GB/s
  - Sustained sequential write speed from compute nodes > 20 GB/s
  - Two InfiniBand NDR 400 Gbps network adapters (800 Gbps total)
  - 25 Gbps SFP28 Ethernet network adapter
  - Rocky Linux 9.6

- Nvidia InfiniBand NDR Quantum-2 QM9700 managed switch (compute network):
  - 64 ports (400 Gbps per port)
- HPE Aruba Networking CX 8325-48Y8C 25G SFP/SFP+/SFP28 switch (application network):
  - 48 ports (SFP28, 25 Gbps per port)
- HPE Aruba Networking 2930F 48G 4SFP+ switch (management network):
  - 48 ports (1 Gbps per port)

- HPE Cray XD direct liquid cooling system:
  - HPE Cray XD 75kW 208V FIO In-Rack Coolant Distribution Unit
  - Three-chiller setup with BlueBox ZETA Rev HE FC 3.2

The system is assembled in a single rack and is physically located in the NU data center in Block 1.
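The network figures above are quoted in gigabits per second, while the /shared throughput is quoted in gigabytes per second. The short Python sketch below converts between the two, assuming 8 bits per byte and ignoring protocol and encoding overhead, so real throughput will be somewhat lower. Under that assumption the storage server's two NDR 400 links amount to about 100 GB/s, which means the quoted sustained read rate of more than 80 GB/s is a large share of its link capacity. If /shared traffic travels over InfiniBand (an assumption), a single CPU node with one NDR 200 adapter cannot read from it faster than roughly 25 GB/s.

```python
# Convert the InfiniBand link rates listed above from Gbit/s to GB/s.
# Assumption: 8 bits per byte and no protocol/encoding overhead, so these
# values are upper bounds rather than achievable throughput.
BITS_PER_BYTE = 8

links_gbps = {
    "GPU compute node (2 x NDR 400)": 2 * 400,
    "CPU compute node (1 x NDR 200)": 200,
    "/shared storage server (2 x NDR 400)": 2 * 400,
}


def gbps_to_gb_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbps / BITS_PER_BYTE


for name, rate in links_gbps.items():
    print(f"{name}: {rate} Gbps ~ {gbps_to_gb_per_s(rate):.0f} GB/s")
```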

Irgetas cluster theoretical peak performance:

| Subsystem | FP8 | FP16 | FP32 | FP64 |
|---|---|---|---|---|
| CPUs (total) | | | 245.0 TFLOPS | 122.5 TFLOPS |
| GPUs (total) | 47,492 TFLOPS | 23,746 TFLOPS | 1,606 TFLOPS | 803 TFLOPS |
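The CPU row of the table can be approximated as nodes × sockets × cores × base clock × FLOPs per cycle. The sketch below assumes 16 FP64 FLOPs per cycle per core for these Zen 4 EPYC processors (2 FMA pipes × 4 doubles per 256-bit vector × 2 FLOPs per fused multiply-add) and counts only the 6 GPU nodes and 10 CPU nodes, not the login, management, or storage nodes. These are illustrative assumptions, not the HPC Team's documented method. With them the result lands within about 0.1 TFLOPS of the table (roughly 122.6 TFLOPS FP64 and 245.1 TFLOPS FP32); FP32 is taken as twice FP64 because each vector holds twice as many single-precision values.

```python
# Approximate the Irgetas "CPUs (total)" peak from the node list above.
# Assumptions (not from the page): 16 FP64 FLOPs/cycle/core for Zen 4
# (2 FMA pipes x 4 doubles per 256-bit vector x 2 FLOPs per FMA), base clock,
# and only the GPU and CPU compute nodes are counted.
FP64_FLOPS_PER_CYCLE = 16


def peak_tflops(nodes: int, sockets: int, cores: int, base_ghz: float,
                flops_per_cycle: int = FP64_FLOPS_PER_CYCLE) -> float:
    """nodes x sockets x cores x clock x FLOPs/cycle, returned in TFLOPS."""
    gflops = nodes * sockets * cores * base_ghz * flops_per_cycle
    return gflops / 1000


gpu_node_cpus = peak_tflops(nodes=6, sockets=2, cores=96, base_ghz=2.4)    # EPYC 9654
cpu_node_cpus = peak_tflops(nodes=10, sockets=2, cores=96, base_ghz=2.55)  # EPYC 9684X

fp64 = gpu_node_cpus + cpu_node_cpus
fp32 = 2 * fp64  # twice as many FP32 values fit in each vector register
print(f"FP64 ~ {fp64:.1f} TFLOPS, FP32 ~ {fp32:.1f} TFLOPS")
```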

## Shabyt cluster

The Shabyt cluster was manufactured by Hewlett Packard Enterprise (HPE) and deployed in 2020. For several years it served as the primary computing platform for NU researchers. It has the following hardware configuration:

- 20 CPU compute nodes. Each CPU node features:
  - Two AMD EPYC 7502 CPUs (32 cores / 64 threads, 2.5 GHz Base)
  - 256 GB DDR4-2933 RAM (8-channel)
  - InfiniBand EDR 100 Gbps network adapter
  - Rocky Linux 8.10

- 4 GPU compute nodes. Each GPU node features:
  - Two AMD EPYC 7452 CPUs (32 cores / 64 threads, 2.3 GHz Base)
  - Two Nvidia V100 GPUs (32 GB HBM2)
  - 256 GB DDR4-2933 RAM (8-channel)
  - InfiniBand EDR 100 Gbps network adapter
  - Rocky Linux 8.10

- 1 interactive login node:
  - AMD EPYC 7502P CPU (32 cores / 64 threads, 2.5 GHz Base)
  - 256 GB DDR4-2933 RAM (8-channel)
  - InfiniBand EDR 100 Gbps network adapter
  - Rocky Linux 8.10

- A BeeGFS-based storage system for software and user home directories (/shared), consisting of two NVMe SSD storage servers in RAID 6 configuration with a total capacity of 16 TB raw (9.9 TB usable). Each storage server features:
  - AMD EPYC 7452 CPU (32 cores / 64 threads, 2.3 GHz Base)
  - 128 GB DDR4-2933 RAM (8-channel)
  - Two InfiniBand EDR 100 Gbps network adapters (200 Gbps total)
  - Rocky Linux 8.10

- 144 TB (raw) HPE MSA 2050 SAS HDD Array in RAID 6 configuration for backups and large data storage for user groups (/zdisk)

- Mellanox InfiniBand EDR v2 managed switch (compute network):
  - 36 ports (100 Gbps per port)
- HPE 5700 48G 4XG 2QSFP+ switch (application network):
  - 48 ports (1 Gbps per port)
- Aruba 2540 48G 4SFP+ switch (management network):
  - 48 ports (1 Gbps per port)

The system is assembled in two racks and is physically located in the NU data center in Block C2.

Shabyt cluster theoretical peak performance:

| Subsystem | FP8 | FP16 | FP32 | FP64 |
|---|---|---|---|---|
| CPUs (total) | | | 121.7 TFLOPS | 60.8 TFLOPS |
| GPUs (total) | | 897.6 TFLOPS | 112.2 TFLOPS | 56.1 TFLOPS |
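Because the node list gives the GPU count (4 GPU nodes × 2 V100 GPUs = 8 GPUs), the GPU row can be divided back into per-GPU theoretical peaks, which can be a useful upper bound when estimating what a single-GPU job might reach. This minimal sketch only rearranges numbers already in the table above; it does not draw on any vendor specification.

```python
# Per-GPU theoretical peaks implied by the Shabyt "GPUs (total)" row above.
# GPU count taken from the node list: 4 GPU nodes x 2 V100 GPUs per node.
GPU_COUNT = 4 * 2

# Totals copied from the table (TFLOPS); FP8 is not listed for these GPUs.
totals_tflops = {"FP16": 897.6, "FP32": 112.2, "FP64": 56.1}

per_gpu_tflops = {prec: total / GPU_COUNT for prec, total in totals_tflops.items()}

for prec, value in per_gpu_tflops.items():
    print(f"{prec}: {value:.1f} TFLOPS per GPU")
```

Run as-is, this prints about 112.2 (FP16), 14.0 (FP32), and 7.0 (FP64) TFLOPS per GPU.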

## Muon cluster

Muon is an older cluster used by the faculty of the Physics Department. It was manufactured by HPE and first deployed in 2017. It has the following hardware configuration:

- 10 CPU compute nodes. Each CPU node features:
  - Intel Xeon CPU E5-2690v4 (14 cores / 28 threads, 2600 MHz Base)
  - 64 GB DDR4-2400 RAM (4-channel)
  - 1 Gbps Ethernet network adapter
  - Rocky Linux 8.10

- Interactive login node:
  - Intel Xeon CPU E5-2640v4 (10 cores / 20 threads, 2400 MHz Base)
  - 64 GB DDR4-2400 RAM (4-channel)
  - 10 Gbps Ethernet network adapter (WAN traffic)
  - 10 Gbps Ethernet network adapter (compute traffic)
  - Rocky Linux 8.10

- 2.84 TB (raw) SSD storage for software and user home directories (/shared)

- 7.2 TB (raw) HDD RAID 5 storage for backups and large data storage for user group (/zdisk)

- HPE 5800 Ethernet switch (compute network)

The system is physically located in the NU data center in Block 1.

Muon cluster theoretical peak performance:

| Subsystem | FP8 | FP16 | FP32 | FP64 |
|---|---|---|---|---|
| CPUs (total) | | | 11.6 TFLOPS | 5.8 TFLOPS |
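The Muon figures can be checked with the usual CPU peak formula: nodes × cores × base clock × FLOPs per cycle. This sketch assumes one E5-2690v4 per compute node (as the list above states), 16 FP64 FLOPs per cycle per core for this Broadwell-generation CPU (2 AVX2 FMA units × 4 doubles × 2 FLOPs), and excludes the login node. Under those assumptions it reproduces the 5.8 and 11.6 TFLOPS in the table.

```python
# Theoretical CPU peak for Muon from the node list above.
# Assumptions: 10 compute nodes with one E5-2690v4 each, 16 FP64 FLOPs per cycle
# per core (2 AVX2 FMA units x 4 doubles x 2 FLOPs), base clock, login node excluded.
NODES = 10
CORES_PER_NODE = 14
BASE_GHZ = 2.6
FP64_FLOPS_PER_CYCLE = 16

# cores x GHz x FLOPs/cycle gives GFLOPS; divide by 1000 for TFLOPS.
fp64_tflops = NODES * CORES_PER_NODE * BASE_GHZ * FP64_FLOPS_PER_CYCLE / 1000
fp32_tflops = 2 * fp64_tflops  # 8 floats vs 4 doubles per 256-bit vector

print(f"FP64 ~ {fp64_tflops:.1f} TFLOPS, FP32 ~ {fp32_tflops:.1f} TFLOPS")
# prints: FP64 ~ 5.8 TFLOPS, FP32 ~ 11.6 TFLOPS
```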

## Other facilities on campus

There are several other computational facilities at NU that are not managed by the NU HPC Team. Brief information about them is provided below. All inquiries regarding their use for research projects should be directed to the person responsible for each facility.

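| Cluster name | Short description | Contact details |
|---|---|---|
| High-performance bioinformatics cluster "Q-Symphony" | HPE Apollo R2600 Gen10 cluster. Compute nodes: 8 nodes, each with dual Intel Xeon Gold 6226R (16 cores / 32 threads, 3.3 GHz Base) and 512 GB DDR4-2933 RAM. Storage: 1.3 PB (raw) HPE D6020 HDD storage. Interconnect: InfiniBand FDR. OS: Red Hat Linux. This cluster is optimized for bioinformatics research and the analysis of large genomics datasets. | Ulykbek Kairov, Head of Laboratory - Leading Researcher, Laboratory of Bioinformatics and Systems Biology, Private Institution National Laboratory Astana. Email: ulykbek.kairov@nu.edu.kz |
| Computational resources for AI infrastructure at NU | NVIDIA DGX-1 (1 unit): dual Intel Xeon E5-2698 v4 (20 cores / 40 threads, 2.2 GHz Base), 512 GB DDR4 RAM; 8 x NVIDIA Tesla V100 GPUs (8 x 32 GB HBM2); storage: 4 x 1.92 TB SSD in RAID 0; OS: Ubuntu Linux. NVIDIA DGX-2 (2 units): dual Intel Xeon Platinum 8168 (24 cores / 48 threads, 2.7 GHz Base), 512 GB DDR4-2133 RAM; 16 x NVIDIA Tesla V100 GPUs (16 x 32 GB HBM2); storage: 30.72 TB NVMe SSD; OS: Ubuntu Linux. NVIDIA DGX A100 (4 units): dual AMD EPYC Rome 7742 (64 cores / 128 threads, 2.25 GHz Base), 512 GB DDR4 RAM; 8 x NVIDIA A100 GPUs (8 x 40 GB HBM2); storage: 15 TB NVMe SSD; OS: Ubuntu Linux. | Yerbol Absalyamov, Technical Project Coordinator, Institute of Smart Systems and Artificial Intelligence, Nazarbayev University. Email: yerbol.absalyamov@nu.edu.kz. Makat Tlebaliyev, Computer Engineer, Institute of Smart Systems and Artificial Intelligence, Nazarbayev University. Email: makat.tlebaliyev@nu.edu.kz |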