Systems

Revision as of 19:04, 23 May 2024

Nazarbayev University's Research Computing currently operates two main HPC facilities: Shabyt and Muon.

Shabyt cluster

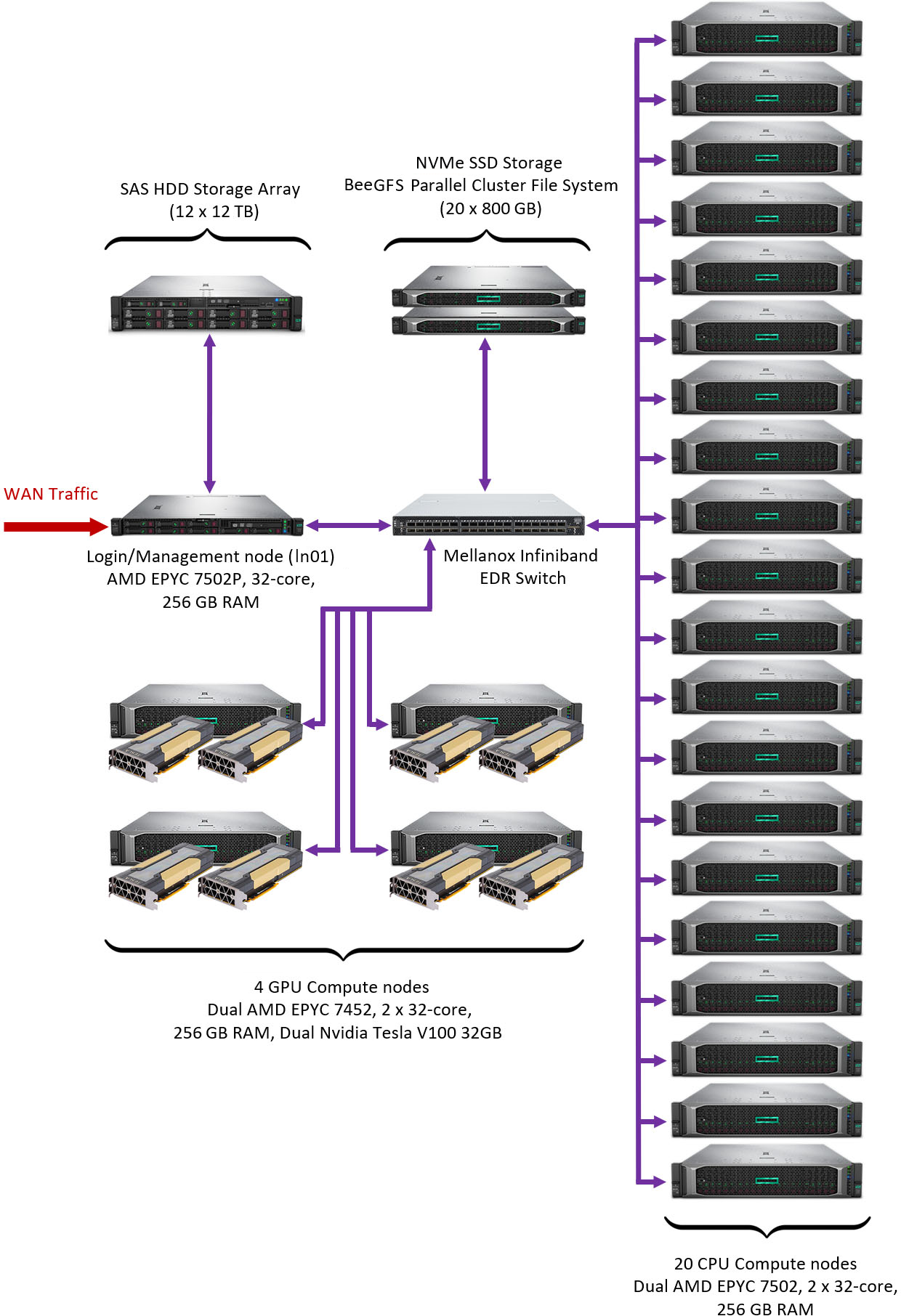

The Shabyt cluster was manufactured by Hewlett Packard Enterprise (HPE) and deployed in 2020. It serves as the primary platform for computational work by NU and NLA researchers and has the following hardware configuration:

- 20 compute nodes with dual AMD EPYC 7502 CPUs (32 cores / 64 threads, 2.5 GHz Base), 256 GB 8-channel DDR4-2933 RAM, Rocky Linux 8.7

- 4 compute nodes with dual AMD EPYC 7452 CPUs (32 cores / 64 threads, 2.3 GHz Base), 256 GB 8-channel DDR4-2933 RAM, dual NVIDIA Tesla V100 GPUs with 32 GB HBM2 RAM, Rocky Linux 8.7

- 1 interactive login node with a single AMD EPYC 7502P CPU (32 cores / 64 threads, 2.5 GHz Base), 256 GB 8-channel DDR4-2933 RAM, Rocky Linux 8.7

- Mellanox InfiniBand EDR 100 Gb/s interconnect in each node for compute traffic

- Mellanox InfiniBand EDR v2 36-port managed switch (100 Gb/s per port)

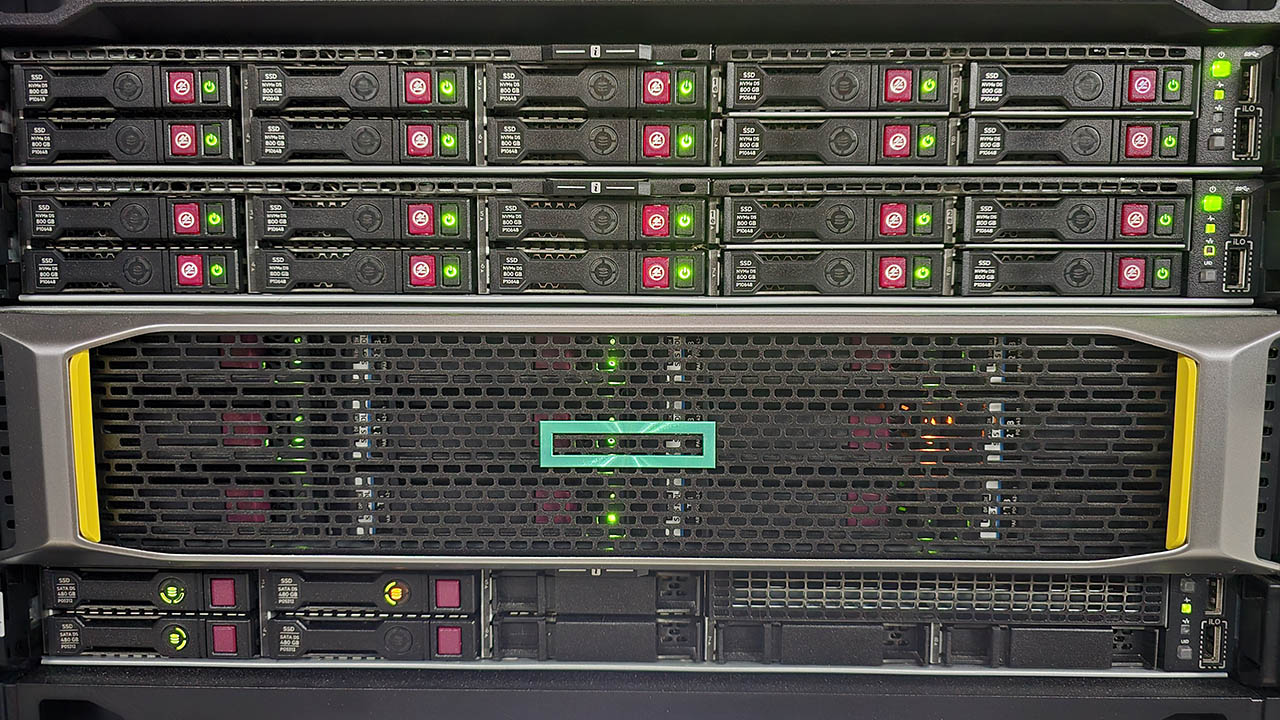

- 16 TB (raw) internal NVMe SSD storage for software and user home directories (/shared)

- 144 TB (raw) HPE MSA 2050 SAS HDD array for backups and large data storage for user groups (/zdisk)

- Theoretical peak CPU performance of the system is about 60 TFLOPS (double precision)

- Theoretical peak GPU performance of the system is about 65 TFLOPS (double precision)
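The two peak figures can be reproduced approximately from the node list. The sketch below is a back-of-the-envelope check, not the HPC team's own method. It assumes 16 double-precision FLOPs per core per cycle for the Zen 2 EPYC CPUs (two 256-bit FMA units), uses base rather than boost clocks, and leaves out the login node. The per-GPU FP64 figure is an input because the exact V100 variant is not stated here; NVIDIA's published FP64 peaks for the V100 family range from about 7 to 8.2 TFLOPS depending on form factor.

```python
# Rough theoretical peak (double precision) for the Shabyt compute nodes.
# Assumptions (not from the hardware list): 16 FP64 FLOPs/cycle/core for
# Zen 2 (2 x 256-bit FMA units), base clocks only, login node excluded.

FP64_FLOPS_PER_CYCLE_ZEN2 = 16

# (node count, sockets per node, cores per socket, base clock in GHz)
CPU_NODE_GROUPS = [
    (20, 2, 32, 2.5),  # AMD EPYC 7502 nodes
    (4, 2, 32, 2.3),   # AMD EPYC 7452 GPU nodes
]


def cpu_peak_tflops(groups, flops_per_cycle=FP64_FLOPS_PER_CYCLE_ZEN2):
    """Sum nodes * sockets * cores * GHz * FLOPs/cycle, returned in TFLOPS."""
    gflops = sum(n * s * c * ghz * flops_per_cycle for n, s, c, ghz in groups)
    return gflops / 1000.0


def gpu_peak_tflops(gpu_count, fp64_tflops_per_gpu):
    """Aggregate FP64 peak; the per-GPU figure depends on the V100 variant."""
    return gpu_count * fp64_tflops_per_gpu


if __name__ == "__main__":
    print(f"CPU peak: {cpu_peak_tflops(CPU_NODE_GROUPS):.1f} TFLOPS")
    # 4 GPU nodes x 2 V100 each = 8 GPUs; try the published variant range.
    for per_gpu in (7.0, 7.8, 8.2):
        total = gpu_peak_tflops(8, per_gpu)
        print(f"GPU peak at {per_gpu} TFLOPS/GPU: {total:.1f} TFLOPS")
```

Under these assumptions the CPU total comes to about 60.6 TFLOPS. That agrees with the figure quoted above. The GPU total falls between 56 and 65.6 TFLOPS, depending on which V100 variant is installed.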

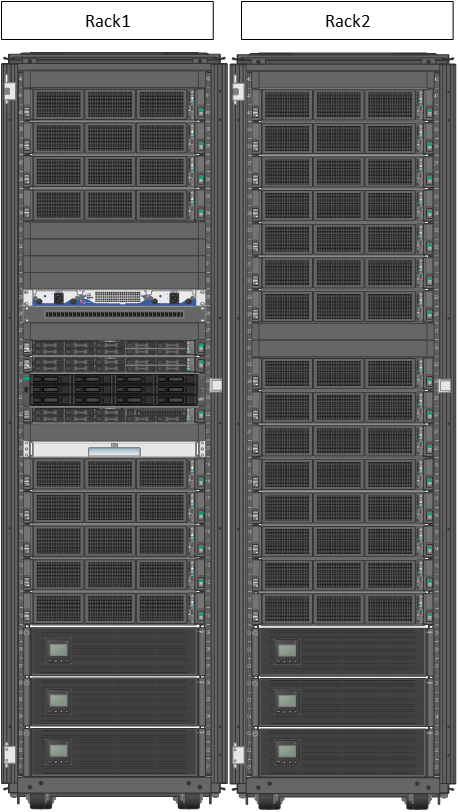

The system is assembled in two racks and is physically located in the NU data center in Block C2.

Muon cluster

Muon is an older cluster used by the faculty of the Physics Department. It was manufactured by HPE, first deployed in 2017, and has the following hardware configuration:

- 10 compute nodes with Intel Xeon E5-2690 v4 CPUs (14 cores / 28 threads, 2.6 GHz Base), 64 GB 4-channel DDR4-2400 RAM, Rocky Linux 8.9

- 1 interactive login node with an Intel Xeon E5-2640 v4 CPU (10 cores / 20 threads, 2.4 GHz Base), 64 GB 4-channel DDR4-2400 RAM, Rocky Linux 8.9

- 2 TB (raw) SSD storage for software and user home directories (/shared)

- 4 TB (raw) HDD RAID 5 storage for backups and large data storage for user groups (/zdisk)

- 1 Gb/s Ethernet network adapter in each node for compute traffic

- HPE 5800 Ethernet switch (1 Gb/s per port)
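The difference between the two clusters' compute interconnects is easiest to see as transfer time. The sketch below converts a nominal link rate in Gb/s into the idealized time needed to move a given amount of data between nodes. It ignores protocol overhead, latency and contention, so real transfers will be slower. The 100 GB payload is an arbitrary example value.

```python
# Idealized node-to-node transfer time at a nominal link rate.
# Assumption: the full line rate is usable (no protocol overhead,
# latency, or contention), so real transfers will take longer.

LINKS_GBIT_PER_S = {
    "Shabyt (InfiniBand EDR)": 100.0,
    "Muon (Ethernet)": 1.0,
}


def transfer_seconds(data_gigabytes, link_gbit_per_s):
    """Seconds to move data_gigabytes (10^9 bytes) over a link_gbit_per_s link."""
    gigabits = data_gigabytes * 8  # gigabytes -> gigabits
    return gigabits / link_gbit_per_s


if __name__ == "__main__":
    payload_gb = 100.0  # hypothetical example payload
    for name, rate in LINKS_GBIT_PER_S.items():
        secs = transfer_seconds(payload_gb, rate)
        print(f"{name}: {secs:.0f} s for {payload_gb:.0f} GB")
```

At these nominal rates, moving 100 GB takes about 8 s over EDR and about 800 s over 1 Gb/s Ethernet. That hundredfold gap matters most for multi-node jobs that exchange large amounts of data.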

Other facilities

Several other computational facilities at NU are not managed by the NU HPC team. Brief information about them is given below.

| Cluster name | Short description | Contact details |
|---|---|---|
| High-performance bioinformatics cluster "Q-Symphony" | **HPE Apollo R2600 Gen10 cluster**<br>Compute nodes: 8 x dual Intel Xeon Gold 6234 (8 cores / 16 threads, 3.3 GHz Base), 256 GB DDR4-2933 RAM per node<br>Storage: 560 TB (raw) HDD storage, HPE D6020<br>OS: Red Hat Linux<br>This cluster is optimized for bioinformatics research and the analysis of large genomics datasets. | Ulykbek Kairov<br>Head of Laboratory - Leading Researcher, Laboratory of bioinformatics and systems biology, Private Institution National Laboratory Astana<br>Email: ulykbek.kairov@nu.edu.kz |
| Computational resources for AI infrastructure at NU | **NVIDIA DGX-1 (1 unit)**<br>CPU: dual Intel Xeon E5-2698 v4 (20 cores / 40 threads, 2.2 GHz Base), 512 GB DDR4 RAM<br>GPUs: 8 x NVIDIA Tesla V100<br>GPU memory: 8 x 32 GB HBM2<br>Storage: 4 x 1.92 TB SSD in RAID 0<br>OS: Ubuntu Linux<br><br>CPU: dual Intel Xeon Platinum 8168 (24 cores / 48 threads, 2.7 GHz Base), 512 GB DDR4-2133 RAM<br>OS: Ubuntu Linux<br><br>**DGX A100 (4 units)**<br>CPU: dual AMD EPYC Rome 7742 (64 cores / 128 threads, 2.25 GHz Base), 512 GB DDR4 RAM<br>GPUs: 8 x NVIDIA A100<br>GPU memory: 8 x 40 GB HBM2<br>Storage: 15 TB NVMe SSD<br>OS: Ubuntu Linux | Yerbol Absalyamov<br>Technical Project Coordinator, Institute of Smart Systems and Artificial Intelligence, Nazarbayev University<br>Email: yerbol.absalyamov@nu.edu.kz<br><br>Makat Tlebaliyev<br>Computer Engineer, Institute of Smart Systems and Artificial Intelligence, Nazarbayev University<br>Email: makat.tlebaliyev@nu.edu.kz |