Systems 1: Difference between revisions



Revision as of 14:20, 13 March 2024

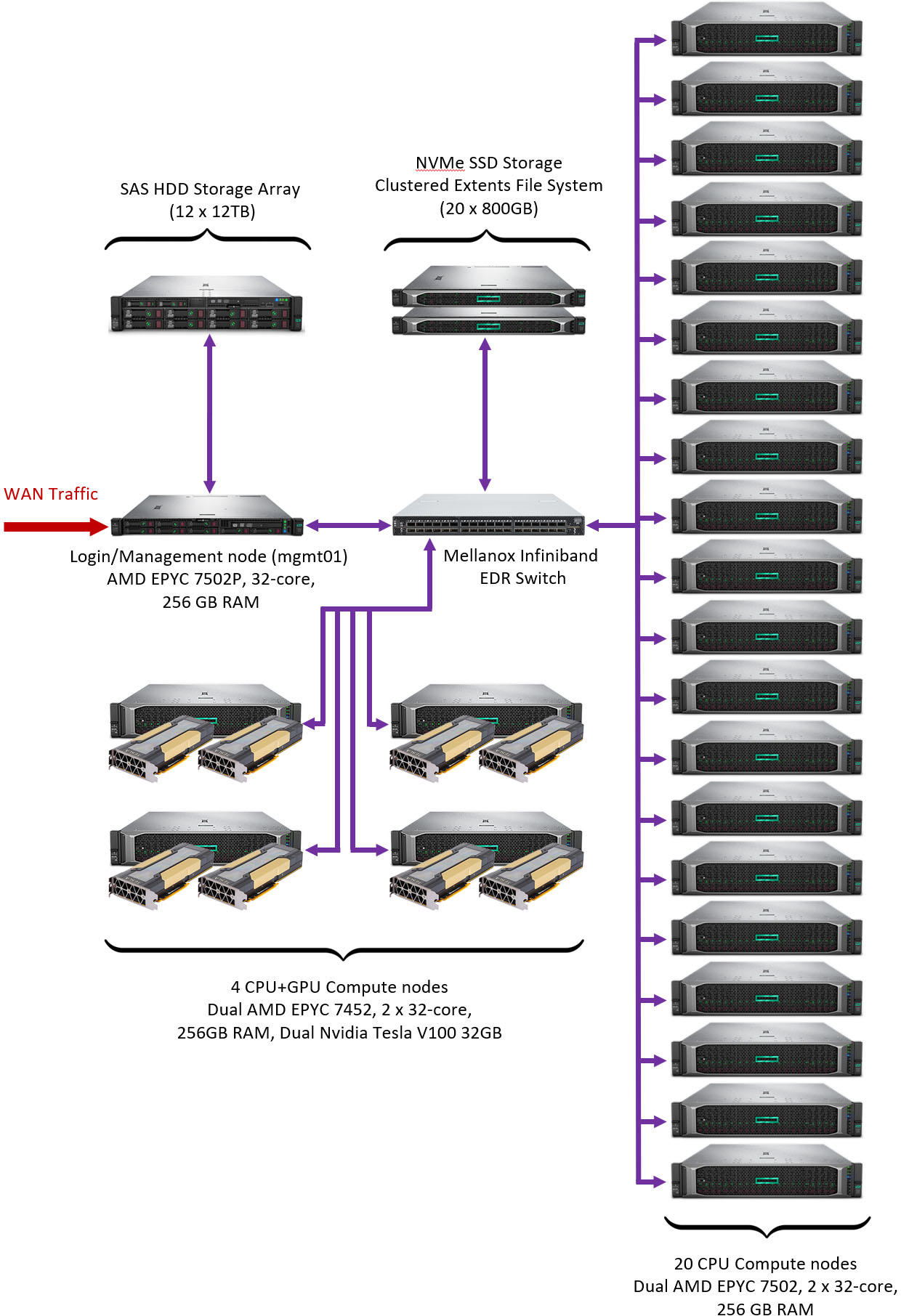

Key Features at a Glance:

- 20 compute nodes with dual AMD EPYC 7502 CPUs (32 cores / 64 threads each, 2.5 GHz base), 256 GB 8-channel DDR4-2933 RAM, CentOS 7.9

- 4 compute nodes with dual AMD EPYC 7452 CPUs (32 cores / 64 threads each, 2.3 GHz base), 256 GB 8-channel DDR4-2933 RAM, dual NVIDIA Tesla V100 GPUs with 32 GB HBM2 RAM, CentOS 7.9

- 1 interactive login node with an AMD EPYC 7502P CPU (32 cores / 64 threads, 2.5 GHz base), 256 GB 8-channel DDR4-2933 RAM, Red Hat Enterprise Linux 7.9

- EDR InfiniBand 100 Gb/s interconnect for compute traffic

- 16 TB internal NVMe SSD storage (HPE Clustered Extents File System)

- 144 TB HPE MSA 2050 SAS HDD array

- Theoretical peak CPU performance of the system is 60 TFlops (double precision)

- Theoretical peak GPU performance of the system is 65 TFlops (double precision)

- SLURM job scheduler
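
The CPU figure above can be roughly reproduced from the node list. The sketch below is only an illustration, not the vendor's or the administrators' calculation: it reads the base clocks as GHz, counts the 24 compute nodes only (not the login node), and assumes 16 double-precision FLOPs per core per cycle, a value that is not stated on this page. The names `FLOPS_PER_CYCLE`, `NODE_GROUPS` and `peak_tflops` are hypothetical.

```python
# Assumption (not stated on this page): each core retires 16 double-precision
# FLOPs per cycle (two 256-bit FMA units, as commonly quoted for Zen 2).
FLOPS_PER_CYCLE = 16

# (node count, CPUs per node, cores per CPU, base clock in GHz),
# taken from the feature list; clocks are read as GHz.
NODE_GROUPS = {
    "EPYC 7502 compute nodes": (20, 2, 32, 2.5),
    "EPYC 7452 GPU nodes": (4, 2, 32, 2.3),
}


def peak_tflops(nodes, cpus_per_node, cores_per_cpu, ghz,
                flops_per_cycle=FLOPS_PER_CYCLE):
    """Theoretical peak in TFlops: total cores x clock (GHz) x FLOPs/cycle / 1000."""
    total_cores = nodes * cpus_per_node * cores_per_cpu
    return total_cores * ghz * flops_per_cycle / 1000.0


per_group = {name: peak_tflops(*spec) for name, spec in NODE_GROUPS.items()}
total_cpu_tflops = sum(per_group.values())

for name, value in per_group.items():
    print(f"{name}: {value:.1f} TFlops")
print(f"Total CPU peak: {total_cpu_tflops:.1f} TFlops")
```

Under these assumptions the total comes out close to the 60 TFlops quoted above. Sustained performance on real workloads will differ, since the estimate ignores boost clocks, memory bandwidth and interconnect effects.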

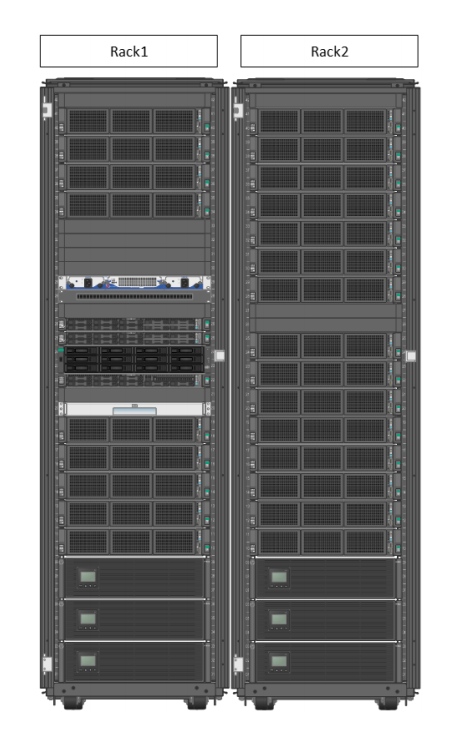

The system is assembled in a two-rack configuration and is physically located at the NU Data Center.

Other NU research computing clusters

| Cluster name | Short description | Contact details |
|---|---|---|
| High-performance bioinformatics cluster "Q-Symphony" | Hewlett-Packard Enterprise Apollo (208 Intel Xeon cores, 3.26 TB RAM, 258 TB RAID HDD, Red Hat Linux)<br>Max computing performance: 7.5 TFlops<br>Optimized for bioinformatics research and analysis of large genomics datasets | Ulykbek Kairov<br>Head of Laboratory - Leading Researcher, Laboratory of Bioinformatics and Systems Biology, Private Institution National Laboratory Astana<br>Email: [[1]] |
| Intelligence-Cognition-Robotics | GPUs: 8 x NVIDIA Tesla V100<br>Performance: 1 petaFLOPS<br>GPU memory: 256 GB total<br>CPU: dual 20-core Intel Xeon E5-2698 v4, 2.2 GHz ... | Zhandos Yessenbayev<br>Senior Researcher, Laboratory of Computational Materials Science for Energy Application, Private Institution National Laboratory Astana<br>Email: [[2]] |
| Computational resources for AI infrastructure at NU | NVIDIA DGX-1 (1 supercomputer):<br>GPUs: 8 x NVIDIA Tesla V100<br>GPU memory: 256 GB<br>CPU: ... | Yerbol Absalyamov<br>Technical Project Coordinator, Office of the Provost - Institute of Smart Systems and Artificial Intelligence, Nazarbayev University<br>Email: yerbol.absalyamov@nu.edu.kz<br>Makat Tlebaliyev<br>Computer Engineer, Office of the Provost - Institute of Smart Systems and Artificial Intelligence, Nazarbayev University<br>Email: makat.tlebaliyev@nu.edu.kz |